Make data in your enterprise do more for less

- Data fabric is the most promising and hottest trend in the data and analytics world

- Data mesh: the new paradigm

- Lakehouse brings the best of data lake and data warehouse

These are some trends from Gartner’s Hype Cycle for Data Management, 2021. For a long time, data enthusiasts knew only two schools of thought: the third normal form (3NF) enterprise data warehouse (EDW) of Bill Inmon and the data mart-based enterprise bus architecture of Ralph Kimball.

Once enterprise needs outgrew the capabilities of both the EDW and data marts, the industry started searching for new approaches. Soon enough, big data was the hottest thing, and everyone jumped on the bandwagon without much thought. Onboarding data was easy, fast, and cheap. (Note: It was not cheaper from a total cost of ownership (TCO) standpoint, but that was how it was initially marketed.) Enterprises could ingest vast amounts of data and process terabytes of it, first with MapReduce and then with Spark. But many of these data lakes became white elephants: they took too long to build, were cumbersome to consume from, and were difficult to govern. The benefits of the data lake served some enterprise users, but not all.

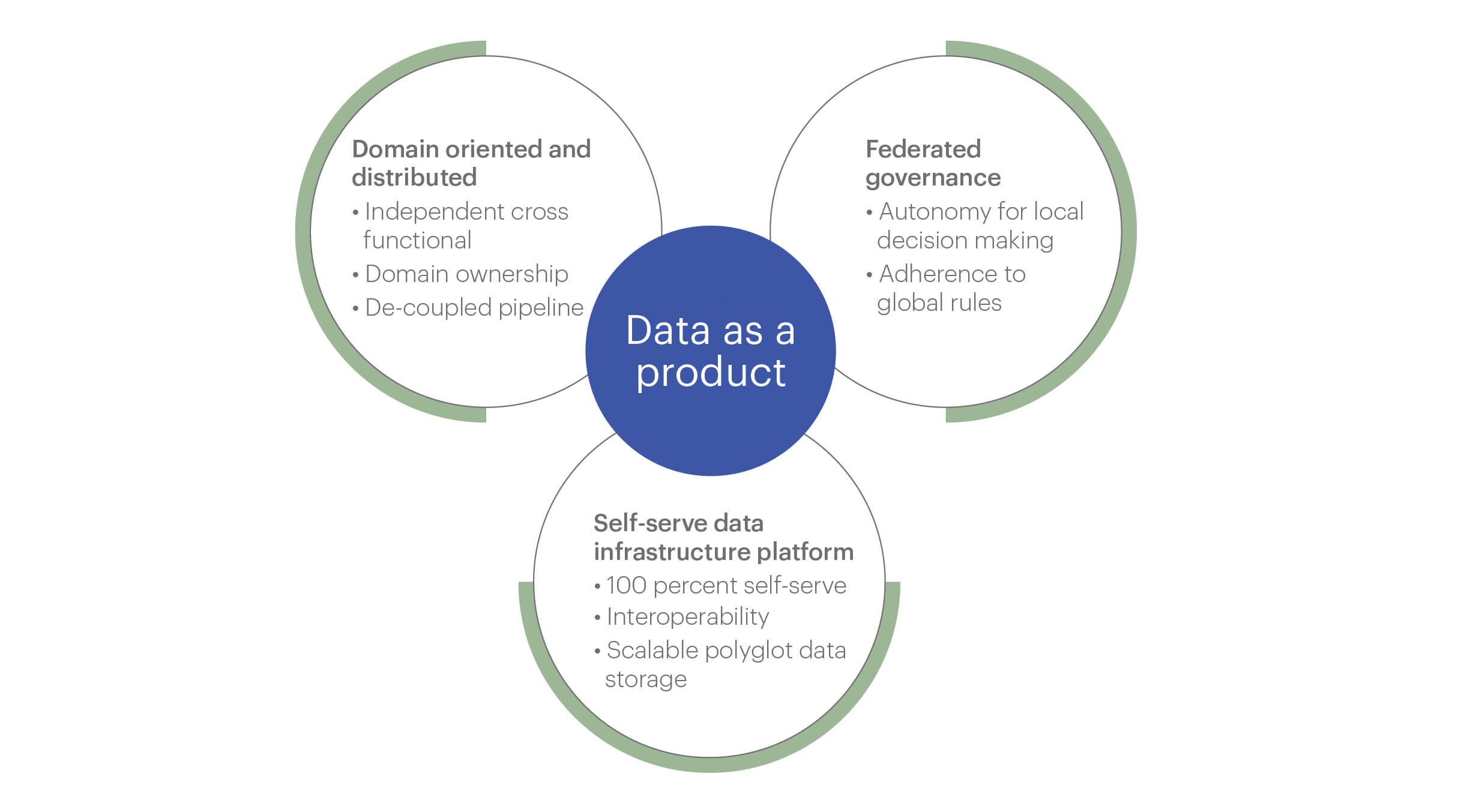

This background matters before we talk about data mesh, because it explains why data mesh architecture is relevant and timely for today’s data and analytics world. Data mesh, a term coined by Zhamak Dehghani, is a network of linked, distributed data products that follow the findable, accessible, interoperable, and reusable (FAIR) principles. It rests on four core principles:

- Domain-oriented decentralized data ownership and architecture

- Data as a product (DaaP)

- Self-serve data infrastructure as a platform

- Federated computational governance

In this blog, we explore data as a product further. A data product is a node in the mesh that includes code, data, metadata, and infrastructure. The data-as-a-product principle addresses the high cost of discovering, understanding, trusting, and using quality data. Because data mesh involves teams from multiple domains, this principle is vital to addressing data quality and dark data (information the organization produces but generally does not use for analytical or other purposes).

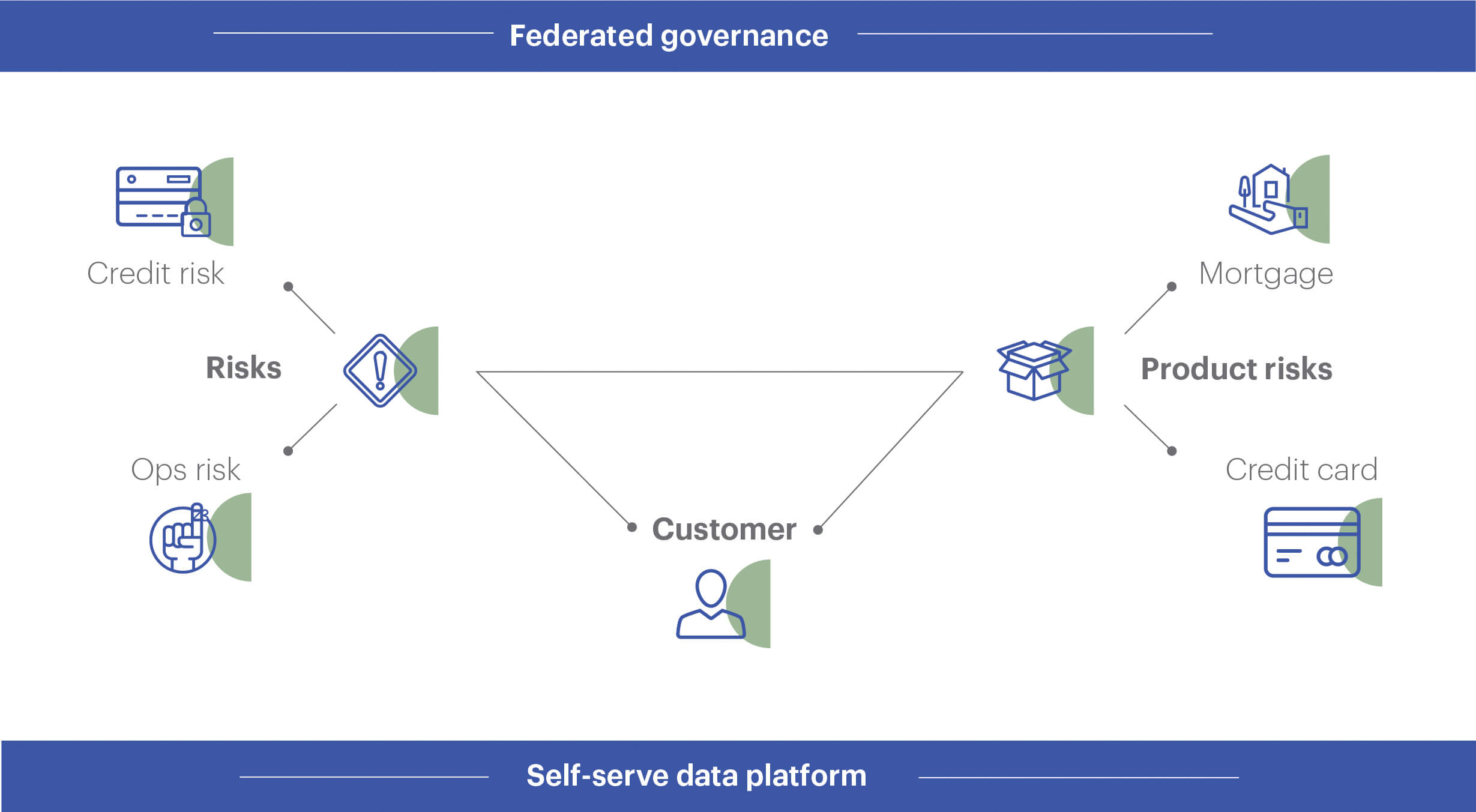

Data as a product results from applying product thinking to datasets, ensuring they have capabilities such as discoverability, security, understandability, and trustworthiness. In some form, DaaP has been practiced for decades, and most companies already follow it to some degree: a data team collects, cleans, integrates, and makes data available to consuming teams that build a service or product. For example, the data team can make customer data available to the marketing team so it can target campaigns at the customers most likely to buy a product. The risk team can use the same data to identify potential fraud, and the operations team can use it to serve customers. Bloomberg financial data is one of the most common and successful examples of data as a product, and you can find many similar examples within each enterprise.

In data mesh, the DaaP principle names specific capabilities that every data product should demonstrate:

Discoverable

To be discoverable, data products need a search engine. Dataset owners register their datasets in it, and consumers use it to find datasets and request access to them (which also supports security, another capability described below). A first iteration could be as simple as a list of datasets on the company intranet, which you can then improve incrementally.
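
To make the register, search, and request-access workflow concrete, here is a minimal sketch of such a first iteration: an in-memory catalog where owners register datasets and consumers search for them and ask for access. The `DataCatalog` and `CatalogEntry` names, their fields, and the matching rule are hypothetical; a real deployment would use a catalog product with persistent storage and authentication.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogEntry:
    name: str
    owner: str          # team that registered and owns the dataset
    description: str
    tags: set[str] = field(default_factory=set)


class DataCatalog:
    """Hypothetical in-memory catalog: owners register datasets, consumers search them."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}
        self._access_requests: list[tuple[str, str]] = []  # (dataset name, requester)

    def register(self, entry: CatalogEntry) -> None:
        # Dataset names must be unique so each one can be found unambiguously.
        if entry.name in self._entries:
            raise ValueError(f"dataset already registered: {entry.name}")
        self._entries[entry.name] = entry

    def search(self, term: str) -> list[str]:
        # Case-insensitive substring match on name, description, and tags.
        term = term.lower()
        return sorted(
            e.name
            for e in self._entries.values()
            if term in e.name.lower()
            or term in e.description.lower()
            or any(term in tag.lower() for tag in e.tags)
        )

    def request_access(self, name: str, requester: str) -> None:
        # Only record the request; approving it is up to the dataset owner.
        if name not in self._entries:
            raise KeyError(name)
        self._access_requests.append((name, requester))

    def pending_requests(self, owner: str) -> list[tuple[str, str]]:
        # Requests waiting on datasets owned by the given team.
        return [r for r in self._access_requests if self._entries[r[0]].owner == owner]


catalog = DataCatalog()
catalog.register(CatalogEntry("customer_profile", "crm-team", "Customer master data", {"customer"}))
catalog.register(CatalogEntry("orders_daily", "sales-team", "Daily order totals", {"sales"}))
print(catalog.search("customer"))  # ['customer_profile']
catalog.request_access("customer_profile", "marketing-analyst")
```

Keeping access requests in the same place as discovery means the owner sees who wants the data at the moment it is found, which is how discoverability also supports security.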

Addressable

Each dataset should have a unique, stable address that consumers can use to reach it. Addressable datasets make teams more productive: data analysts and data scientists can find and use the data they need on their own, while data engineers are interrupted less often by requests to locate data.

Trustworthy

Checking data quality regularly and automatically is essential for a trustworthy data product. Quality checks must run at both pipeline input and output, and dataset owners need to act on their results. Owners can also give data consumers contextual data quality information, for example through Tableau dashboards.
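
A minimal sketch of quality checks at pipeline input and output, written in plain Python. The check functions (`not_null`, `unique`), the `clean_customers` transformation, and the choice to stop the pipeline by raising an exception are illustrative assumptions; many teams use a dedicated data quality tool instead.

```python
from typing import Callable

Row = dict[str, object]
Check = Callable[[list[Row]], list[str]]  # a check returns failure messages


def not_null(column: str) -> Check:
    def check(rows: list[Row]) -> list[str]:
        nulls = sum(1 for r in rows if r.get(column) is None)
        return [f"{column}: {nulls} null value(s)"] if nulls else []
    return check


def unique(column: str) -> Check:
    def check(rows: list[Row]) -> list[str]:
        values = [r.get(column) for r in rows]
        duplicates = len(values) - len(set(values))
        return [f"{column}: {duplicates} duplicate value(s)"] if duplicates else []
    return check


def run_checks(stage: str, rows: list[Row], checks: list[Check]) -> None:
    # Collect every failure, then stop the pipeline so the owner can react.
    failures = [msg for check in checks for msg in check(rows)]
    if failures:
        raise ValueError(f"{stage} quality checks failed: " + "; ".join(failures))


def clean_customers(rows: list[Row]) -> list[Row]:
    # Hypothetical transformation: trim and lowercase email addresses.
    return [
        {**r, "email": str(r["email"]).strip().lower() if r.get("email") else None}
        for r in rows
    ]


def run_pipeline(rows: list[Row]) -> list[Row]:
    # Input checks catch bad source data; output checks guard what consumers see.
    run_checks("input", rows, [not_null("customer_id")])
    output = clean_customers(rows)
    run_checks("output", output, [not_null("customer_id"), unique("customer_id"), not_null("email")])
    return output
```

Failing at the output stage keeps bad data from reaching consumers and gives the dataset owner a concrete message to act on.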

Understandable

Data users (analysts, data scientists, and consuming applications) must be able to understand the data easily, so each dataset should be explained with sample data.

Interoperable

Datasets need metadata that makes them understandable, and they must follow shared naming conventions so that datasets from different domains can be used together.
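
Shared conventions are easier to keep when they are checked automatically. This sketch assumes a hypothetical convention (lower snake_case names for datasets and columns) and a hypothetical set of required metadata keys; substitute your organization’s own rules.

```python
import re

# Hypothetical shared convention: lower snake_case names, and every dataset
# must state its owner, domain, description, and a description per column.
SNAKE_CASE = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")
REQUIRED_METADATA = {"owner", "domain", "description", "columns"}


def validate_dataset(name: str, metadata: dict) -> list[str]:
    """Return a list of convention violations (empty if the dataset complies)."""
    problems = []
    if not SNAKE_CASE.match(name):
        problems.append(f"dataset name '{name}' is not snake_case")
    missing = REQUIRED_METADATA - set(metadata)
    if missing:
        problems.append(f"missing metadata: {sorted(missing)}")
    for column, description in metadata.get("columns", {}).items():
        if not SNAKE_CASE.match(column):
            problems.append(f"column '{column}' is not snake_case")
        if not description:
            problems.append(f"column '{column}' has no description")
    return problems
```

Running a check like this before a dataset is published turns the naming convention into a gate rather than a guideline.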

Secure

Access policies and the approval process for each dataset should be defined and documented upfront, so consumers know who can access the data and who approves requests.
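
One way to state access rules upfront is to attach a policy to each dataset that names the approver and the roles allowed to request access. The `AccessPolicy` class below is a hypothetical sketch, not a real library; in practice the data platform’s identity and access management usually enforces such rules.

```python
from dataclasses import dataclass, field


@dataclass
class AccessPolicy:
    dataset: str
    approver: str                   # the one person or role allowed to approve
    allowed_roles: set[str]         # roles that may request access at all
    pending: set[str] = field(default_factory=set)
    granted: set[str] = field(default_factory=set)

    def request(self, user: str, role: str) -> bool:
        # Requests from roles outside the policy are rejected immediately.
        if role not in self.allowed_roles:
            return False
        self.pending.add(user)
        return True

    def approve(self, approver: str, user: str) -> None:
        # Only the named approver can grant access, and only to a pending request.
        if approver != self.approver:
            raise PermissionError(f"{approver} cannot approve access to {self.dataset}")
        if user not in self.pending:
            raise ValueError(f"no pending request from {user}")
        self.pending.remove(user)
        self.granted.add(user)

    def can_read(self, user: str) -> bool:
        return user in self.granted
```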

If you start thinking of data as a product, you can make the data in your enterprise do more for less. Let’s take the example of customer data. When customer data is a product, it will have the following capabilities to meet the DaaP principle:

- Definitions for all its attributes (name, email, address, preferences)

- The source of each attribute and its owner (the authorized source for specific attributes and the people responsible for creating, modifying, and deleting them)

- A data steward who certifies that the data is correct

- A defined access mechanism: real-time, batch, or both

- Clear rules on who can access the data and who approves access

- Guidance on whether and how it can be combined with other enterprise data

- A pricing model for internal and external users
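
The checklist above can also be captured as a machine-readable product specification, so gaps are visible before the product is published. The field names, the `AccessMode` values, the free-text pricing fields, and the rule that join keys and external pricing are optional are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from enum import Enum


class AccessMode(Enum):
    REAL_TIME = "real-time"
    BATCH = "batch"


@dataclass
class Attribute:
    name: str
    definition: str
    authorized_source: str   # system of record for this attribute
    owner: str               # team responsible for create/modify/delete


@dataclass
class DataProductSpec:
    name: str
    attributes: list[Attribute]
    data_steward: str                     # certifies that the data is correct
    access_modes: set[AccessMode]
    access_approver: str
    allowed_consumers: set[str]
    join_keys: dict[str, str] = field(default_factory=dict)  # other product -> shared key
    internal_price: str = ""
    external_price: str = ""              # optional: only if sold outside the enterprise

    def gaps(self) -> list[str]:
        """List the checklist items this spec does not answer yet."""
        missing = [f"definition for '{a.name}'" for a in self.attributes if not a.definition]
        missing += [
            f"source/owner for '{a.name}'"
            for a in self.attributes
            if not (a.authorized_source and a.owner)
        ]
        if not self.data_steward:
            missing.append("data steward")
        if not self.access_modes:
            missing.append("access mechanism")
        if not (self.access_approver and self.allowed_consumers):
            missing.append("access approver/consumers")
        if not self.internal_price:
            missing.append("internal pricing")
        return missing
```

For example, a customer spec with an undefined `preferences` attribute and no steward would report those gaps, and `gaps()` returns an empty list once every item is filled in.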

When customer data has everything listed above, anyone in the enterprise, or outside it where applicable, can use it as a product. Someone who needs customer data no longer has to ask the data team to create or retrieve it. This is a big step forward from merely treating data as an asset.

Customer data becomes a self-contained product with a product specification, a pricing structure, and life cycle management, and it works for the benefit and delight of its customers.

A data mesh architecture helps you build multiple data products and link them together. It lets enterprises respond with agility to a fast-changing market while improving data quality and usability, increasing reuse, reducing duplication, and building trust. Do more for less. If data is the new oil, you need to treat it as a product.

Zensar’s data engineering and analytics practice, combined with experience-led engineering, is well-positioned to help your enterprise ideate, design, implement, and launch data products faster.